Grafana Benchmark

(5 min)

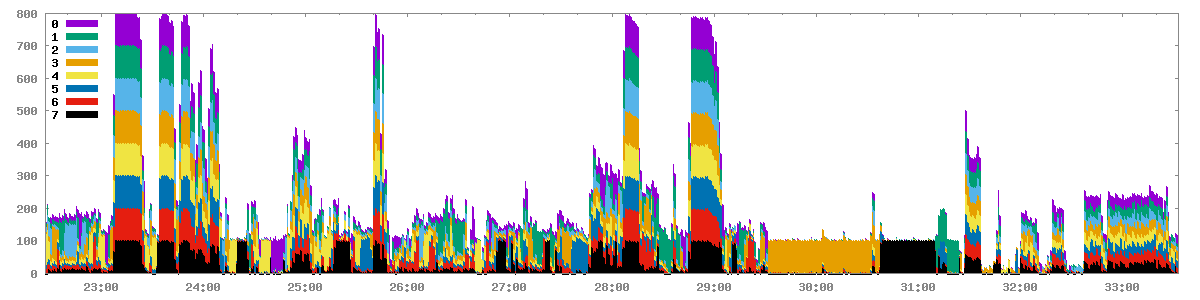

Although we have explained the reasoning behind our Intelligent Cache and the need for faster cores, there is nothing quite like seeing the result.

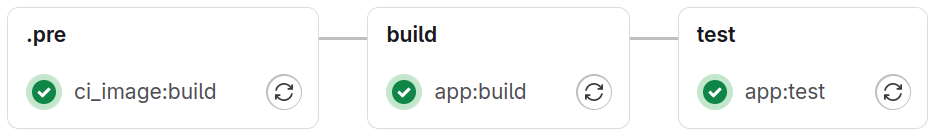

Rather than use one of our internal verification projects or build a facsimile of a complex project, we benchmarked Grafana. Grafana provides enough complexity to demonstrate a real world caching bottleneck while also utilizing two languages and a container build.

While Grafana does not utilize Gitlab CI, we used the existing CI definitions as a guide to build a simple, but realistic Gitlab CI setup. The result was 6-9X faster than Gitlab.com.